A recent innovation has the academic community abuzz with debate. If you're on social media, or are generally not living under a rock, you have probably come across ChatGPT. Since its launch in November 2022, the Artificial Intelligence (AI) chatbot has stirred up conversations left and right, from technological utopias to AI ethics and the technology's effects on employment.

But we're not here to ponder such profound questions. Rather, we are here to investigate the curious case of missing papers and what it means for adopting ChatGPT in academia. With the recent release of GPT-4 (the latest Large Language Model behind the chatbot), it is easy to see how students and professors alike could come to rely on this technology. Before we get to that, let's learn more about this AI-powered bot.

What is ChatGPT?

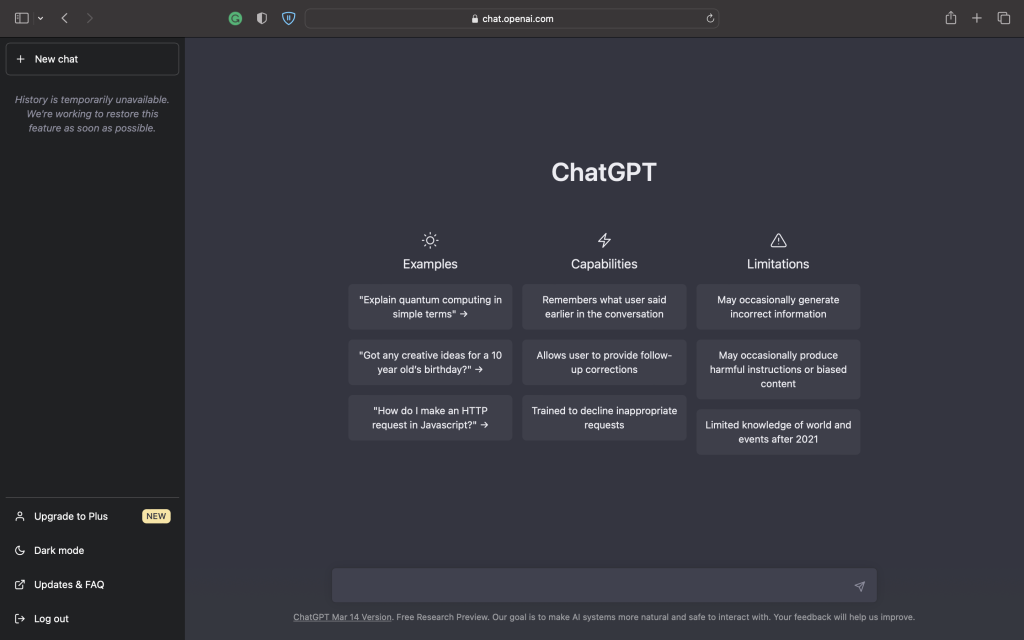

ChatGPT is an AI-driven natural language processing tool that generates human-like responses to the prompts users give it. Created by OpenAI, an AI research and development company, ChatGPT was estimated to have reached 100 million monthly active users in January, just two months after its launch (Reuters). To learn more about the bot, check out our guide on ChatGPT. For now, let's move on to the phenomenon that has made users wary of the chatbot.

More on the Curious Case of Missing Papers

With the public only recently discovering the potential of AI in everyday life, and with experiments constantly under way, the bot has inevitably left the academic world in a bit of a stir. As academics, we are looking for the best ways to bring such technology into our world. Eager attempts are being made to restructure conventional evaluation methods and to create policies for the informed use of AI technology (specifically ChatGPT) in academia. Criticism, though, is to be expected, especially when the AI-powered bot lists a few of its own limitations on its interface. Modest, that one.

Now, the Curious Case of Missing Papers did not simply appear in public view one fine day, nor is it limited to a single occasion. Over the past two months, as ChatGPT has gained more active users, it has also gained more wary "consumers," mainly students (or other learners) and professors (or other evaluators). Students working on assignments, as well as professors, have posted on social media platforms (such as Twitter, Reddit and academic forums) and written articles claiming that ChatGPT tends to produce references that do not exist. They report that when given a prompt on a particular topic, the bot produces a legitimate-sounding response. But when asked to cite sources for that information, it often invents nonexistent academic references, typically combining the names of real researchers in the field, or supplying links whose numbers lead to articles on merely similar topics. At other times, it cites sources with no reliable basis at all.

Why is the bot producing such seemingly "fake" information? Well, the phenomenon is not new; it has been a lively topic of discussion in AI for quite some time. Some experts call it "hallucination," borrowing the term from human psychology. In AI, a hallucination occurs when a model generates output that sounds plausible but is not supported by its training data or by the facts. Other experts call it "AI confabulation" (another term borrowed from human psychology) or "stochastic parroting": because the model works by predicting the most likely next words, it can assemble text that looks exactly like a citation without any link to a real source. So while the explanations for the missing or nonexistent sources differ, the result is much the same: a greater risk of misunderstanding and misinformation.

But what is the point of a source that cannot be traced? Can it be reliable if it does not even exist? What is the point of research that does not add to one's knowledge of the topic or to the wider literature? And what more daunting implications are we yet to discover? While the answers to these questions depend on our own work and specific aims, such cases undeniably create a great conundrum for users seeking more than just inspiration from the bot.

What Can We Do Moving Forward?

With seemingly abundant knowledge available at a single click, the merits of the technology are evident and laudable, and its impact on the academic world is inevitable. However, as users of a novel technology, we are responsible for being wise "consumers" and making well-informed decisions. One good practice is to learn more about the tools we use during the research process and, for as long as possible, to rely more heavily on self-conducted (but well-assisted) research.

That was our take on ChatGPT and the Curious Case(s) of Missing Papers. Do let us know what you think in the comments!