I wanted to review the three big citation indexes (Web of Science, Scopus, and Google Scholar) at three levels (articles, authors, and journals), since there have been some noteworthy changes this year. Citations are only part of the story, so I will also point out where alternative metrics, such as views and social media mentions, are available.

A bit of history: Web of Science began as the Science Citation Index, launched in the 1960s by the Institute for Scientific Information. What set it apart was that, when gathering information from journals, it also recorded each paper's list of references. That seems like a small thing, but it revealed the relationships between papers and made citation counts possible. Web of Science had no real competition as a citation index until 2004, when both Google Scholar and Scopus (from the publishing giant Elsevier) launched. Web of Science is deep, indexing over 100 years of journal content, but it is selective, so its coverage is not as wide as that of Google Scholar or Scopus.

1. Article metrics

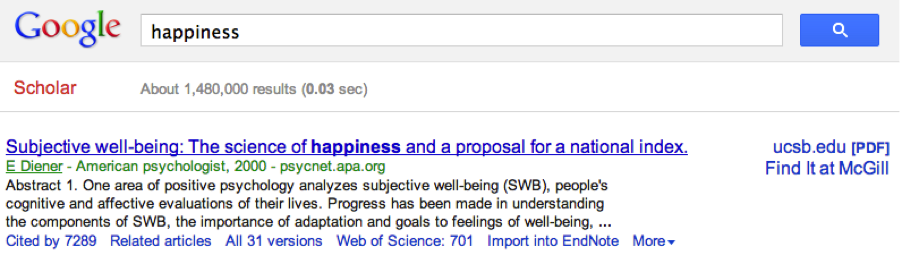

Citation counts may vary between the indexes, depending on how well each one covers a subject. It is worth exploring each one, and it is necessary to state which index a count comes from. The count is often highest in Google Scholar, since it can link to non-journal content such as presentation slides.

Citations are useful because they let you move forward in time, finding newer papers that may be of interest. If a paper is important to you, you can set up a citation alert to receive an email notification whenever it is cited. Sorting your search results in Web of Science and Scopus by citations will also help you find the seed papers that are frequently referenced in your research area.

New in beta in Web of Science are enriched cited references for some records, showing where in the text an article is cited, how many times, and alongside which other references. Web of Science also highlights Hot Papers: papers published in the last two years whose citations in the most recent two-month period place them in the top 0.1% for their field. It also makes it easy to find Highly Cited Papers, those in the top 1% by citations for their field and publication year over the last 10 years.
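To make the Hot Papers idea more concrete, here is a minimal sketch of the test, assuming the top-0.1% threshold Clarivate uses and approximating "the last two years" as 730 days. The function name `is_hot_paper` and its inputs are hypothetical; Clarivate computes this from its own field baselines, and this is only an illustration of the percentile logic.

```python
from datetime import date


def is_hot_paper(pub_date, recent_citations, field_recent_counts, today, top_share=0.001):
    """Hypothetical Hot Paper check.

    pub_date: publication date of the paper.
    recent_citations: citations the paper received in the most recent two months.
    field_recent_counts: the same two-month counts for papers in the same field
        published in the same two-year window (assumed to be supplied by the caller).
    top_share: the assumed threshold, 0.001 for the top 0.1%.
    """
    # Only papers published within roughly the last two years qualify.
    if (today - pub_date).days > 730:
        return False
    if not field_recent_counts:
        return False
    # Share of field peers with strictly more recent citations than this paper.
    higher = sum(1 for c in field_recent_counts if c > recent_citations)
    return higher / len(field_recent_counts) < top_share
```

With real data the peer list would contain thousands of papers; a handful of values is enough to exercise the logic.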

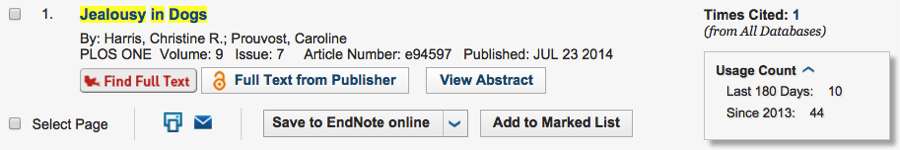

Item-level usage counts came to Web of Science much later, as people became interested in alternative metrics. They count how many times people click through to the full text or export an item to a citation management program like EndNote (EndNote is available from McGill Library!). You can see which papers people have paid attention to in the last 180 days, or all time (which really means since 2013, when counting began).
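The two usage windows work roughly like this sketch, which assumes each usage event (a full-text click or an export) is stored as a date. The January 1, 2013 start date and the "after today minus 180 days" boundary are assumptions for illustration, not Web of Science's documented rules, and `usage_counts` is a hypothetical name.

```python
from datetime import date, timedelta

# Assumed start of counting; the post only says "since 2013".
COUNTING_START = date(2013, 1, 1)


def usage_counts(event_dates, today, window_days=180):
    """Return (last_window, all_time) usage counts from a list of event dates."""
    window_start = today - timedelta(days=window_days)
    # Events inside the rolling window (assumed boundary: strictly after window_start).
    recent = sum(1 for d in event_dates if window_start < d <= today)
    # "All time" only covers events since counting began.
    all_time = sum(1 for d in event_dates if COUNTING_START <= d <= today)
    return recent, all_time
```

For example, events on 2012-05-01, 2015-03-03, 2021-01-01, and 2021-05-30, checked on 2021-06-01, give 2 uses in the last 180 days and 3 all time, because the 2012 event predates counting.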

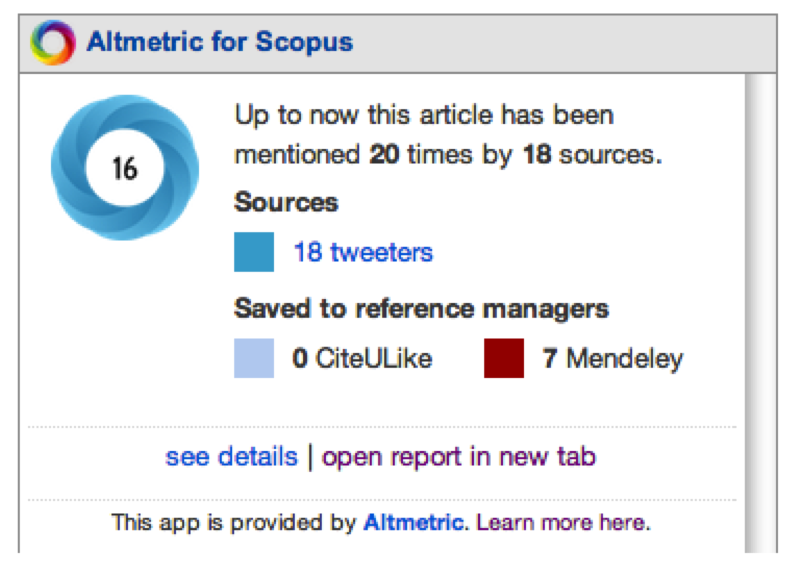

Scopus has view counts too, but it takes alternative metrics further by integrating PlumX Metrics, which have five categories: citations, usage (clicks and downloads), captures (bookmarks), mentions (blog posts, Wikipedia, etc.), and social media (tweets, Facebook likes, etc.).

2. Author metrics

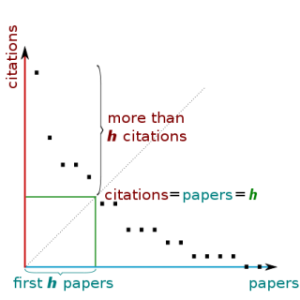

Article citations are used to calculate author metrics. A popular one is the h-index: an author has an index of h if h of their papers have each been cited at least h times (read about this index in Hirsch's article in arXiv). Critics of the h-index point out that it depends on the length of the researcher's career and on their field, so it should not be used to compare researchers.
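Here is a small sketch of how the h-index is calculated from a list of per-paper citation counts: rank the papers from most to least cited, then find the last rank at which the citation count is still at least the rank. The function name is just for illustration.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            # Counts only decrease from here, so no higher rank can qualify.
            break
    return h
```

An author whose papers have been cited 10, 8, 5, 4, and 3 times has an h-index of 4: four papers have at least 4 citations, but there are not five papers with at least 5.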

When searching for author metrics, it is useful to have these identifiers on hand, if possible:

- ORCID (author registration required)

- Web of Science ResearcherID (author registration required)

- Scopus Author Identifier (automatically assigned to authors but the profiles can be claimed and verified)

- My Citations profile in Google Scholar (created by authors)

A new visualization in Web of Science author profiles is the Beamplot, which shows citation data going back to 1980. Each point on the plot represents one paper's citations divided by the mean for papers in the same Web of Science subject category and publication year.
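The normalization behind each Beamplot point, as described above, can be sketched like this. It is only an illustration: the tuple layouts and function names are hypothetical, and Clarivate's production Beamplot may normalize differently (for example, by also accounting for document type).

```python
from collections import defaultdict
from statistics import mean


def category_means(pool):
    """Mean citations per (subject category, year) from (category, year, citations) tuples."""
    groups = defaultdict(list)
    for category, year, cites in pool:
        groups[(category, year)].append(cites)
    return {key: mean(values) for key, values in groups.items()}


def normalized_scores(papers, pool):
    """Map paper_id to citations / mean for its category and year.

    papers: (paper_id, category, year, citations) tuples for one author.
    pool: the comparison set of (category, year, citations) tuples.
    Returns None for a paper with no baseline or a zero mean.
    """
    means = category_means(pool)
    scores = {}
    for paper_id, category, year, cites in papers:
        baseline = means.get((category, year))
        scores[paper_id] = cites / baseline if baseline else None
    return scores
```

A score above 1 means the paper has been cited more than the average paper in its category and year; a score below 1 means less.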

3. Journal metrics

The Journal Impact Factor and other metrics for journals indexed in Web of Science are published each year in Journal Citation Reports. Web of Science is now a collection of subject indexes, and Journal Impact Factor data is provided for journals in the Science Citation Index Expanded and the Social Sciences Citation Index. This year, Journal Citation Reports has expanded to include journals from the Arts & Humanities Citation Index and the Emerging Sources Citation Index, along with a new metric, the Journal Citation Indicator, which is normalized by field to allow comparisons across disciplines.
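The Journal Impact Factor for a given year is the number of citations received that year by items the journal published in the previous two years, divided by the number of citable items (articles and reviews) it published in those two years. A minimal sketch, assuming the counts have already been gathered into dictionaries keyed by publication year (the function and argument names are hypothetical):

```python
def impact_factor(year, cites_by_pub_year, citable_items_by_year):
    """Two-year Journal Impact Factor for `year`.

    cites_by_pub_year: citations received during `year`, keyed by the
        publication year of the cited items.
    citable_items_by_year: number of articles and reviews published, keyed by year.
    """
    window = (year - 1, year - 2)
    cites = sum(cites_by_pub_year.get(y, 0) for y in window)
    items = sum(citable_items_by_year.get(y, 0) for y in window)
    return cites / items if items else None
```

For example, a journal that published 100 citable items in each of 2018 and 2019, and whose items from those years were cited 500 times in 2020, would have a 2020 Impact Factor of 2.5. Citations to older items do not count.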

There is an updated CiteScore methodology in Scopus that uses a four-year publication window (the Journal Impact Factor gives citations two years to build up). You can choose to rank only open access journals in a subject by CiteScore. With the Scopus source comparison tool, you can also find out what percentage of a journal's documents are review articles (reviews are often highly cited) or have never been cited at all.
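Under the updated methodology, CiteScore for a year counts the citations received over four years by documents published in those same four years, divided by the number of those documents. The sketch below also computes the review and never-cited shares. It assumes each document's citation count within the window is already known and treats every document as eligible, whereas Scopus only counts certain document types; the `Doc` class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    year: int        # publication year
    doc_type: str    # e.g. "article" or "review"
    citations: int   # citations received within the four-year window (assumed precomputed)


def in_window(docs, year):
    """Documents published in the four years ending with `year`."""
    return [d for d in docs if year - 3 <= d.year <= year]


def citescore(docs, year):
    window = in_window(docs, year)
    if not window:
        return None
    return sum(d.citations for d in window) / len(window)


def share(docs, predicate):
    """Fraction of documents matching `predicate` (0.0 for an empty list)."""
    return sum(1 for d in docs if predicate(d)) / len(docs) if docs else 0.0
```

For a 2020 CiteScore, a document published in 2016 falls outside the window, so its citations are ignored. `share(in_window(docs, 2020), lambda d: d.doc_type == "review")` gives the review share, and `lambda d: d.citations == 0` gives the never-cited share.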

Google Scholar also has a metrics page that ranks journals by h5-index (the h-index for articles published in the last five complete years). Journals can be browsed by category and subcategory.
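The h5-index is the ordinary h-index restricted to a five-year window. Here is a minimal sketch, assuming a journal's articles are given as (publication year, citations) pairs and that the caller knows the last complete year; the function name is hypothetical.

```python
def h5_index(articles, last_complete_year):
    """h-index of articles published in the five years ending with last_complete_year."""
    first_year = last_complete_year - 4
    recent = sorted(
        (cites for year, cites in articles if first_year <= year <= last_complete_year),
        reverse=True,
    )
    h = 0
    for rank, cites in enumerate(recent, start=1):
        if cites < rank:
            break
        h = rank
    return h
```

An article from before the window does not count, no matter how highly cited it is. That keeps the ranking focused on recent output.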

Journal metrics are not meant to be used to judge the research of individuals, but they can come in handy when you are deciding where to publish your research. Still, they are no substitute for the advice of trusted experts.

I probably went on for too long, so please let me know if you have any questions!